If you've built anything with LLMs, you know the frustration. Your prompts live in Google Docs, your team reviews them in Slack, someone copy-pastes the final version into code, and inevitably something breaks silently.

Every handoff is a disaster waiting to happen.

Alex felt this pain firsthand while building his previous startup, an AI tutor. Mixed product and engineering teams were wasting days shipping each prompt because the workflow was completely broken.

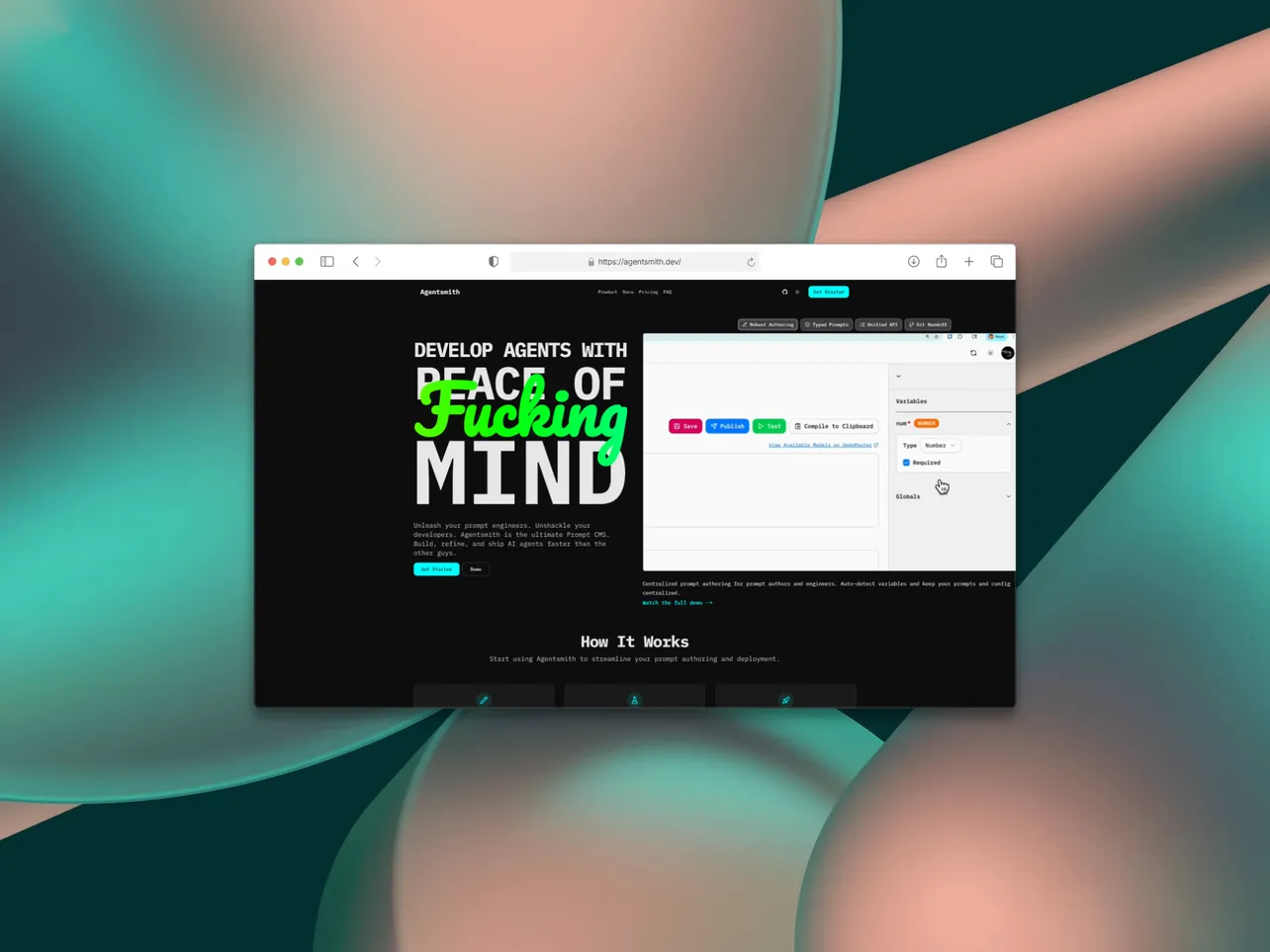

That's when he decided to build Agentsmith, an open-source prompt authoring and management platform designed to streamline iteration and integration.

The timing couldn't be better.

The LLMOps market is exploding, jumping from $4.35 billion in 2023 to a projected $13.95 billion by 2030 at a 21.3% CAGR. Companies are finally realizing that managing LLM applications requires proper tooling, not just hoping things work.

From AI Tutor to Prompt Management Pioneer

Q: What problem does your product solve?

Alex: Mixed product + engineering teams waste days shipping each prompt because drafts live in docs, reviews happen in Slack, and final text is copy-pasted into code. Every hand-off risks silent prompt errors. Agentsmith provides an open-source prompt authoring and management platform to streamline iteration and integration.

Q: What inspired you to start this?

Alex: I've spent the past couple of years working with LLMs, and I built Agentsmith to alleviate the pains I felt.

1. Reliability: prompts are not considered first-class citizens in code. Templating, variables, and configuration are not hardened, which easily leads to LLM calls silently failing.

2. Collaboration: iterating and sharing prompts between teammates can get messy fast without a single source of truth.

3. Authoring: writing, testing, saving, and handing off prompts can all happen in different apps. Key changes can be lost in translation.

4. Observability: you can't improve what you can't see. Without correlating prompts to outcomes, you won't know where the problems are and in which direction to iterate.

His experience building an AI tutor gave him deep insight into these workflow problems. But the breakthrough moment came when he realized he was overthinking the launch.

Q: What changed everything?

Alex: So far I was just building, then realized I was just being a bitch by not launching. So here we are.

The LLMOps Market Opportunity

The numbers behind Alex's timing are compelling:

Market Explosion: The global LLMOps software market was valued at $4.35 billion in 2023 and is projected to reach $13.95 billion by 2030, with a CAGR of 21.3%. This isn't a gradual growth story; it's an explosion driven by enterprises scaling AI across their operations.
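For readers who want to sanity-check the growth figure, here is a minimal sketch of the standard CAGR formula applied to the two dollar values quoted above. The compounding window is an assumption: the article does not say whether the 21.3% rate is measured from 2023 or over a forecast period starting in 2024, so the sketch computes both readings.

```typescript
// Compound annual growth rate: (end / start)^(1 / years) - 1.
function cagr(startValue: number, endValue: number, years: number): number {
  if (startValue <= 0 || years <= 0) {
    throw new Error("startValue and years must be positive");
  }
  return Math.pow(endValue / startValue, 1 / years) - 1;
}

// Figures quoted in the article, in billions of USD.
const start2023 = 4.35;
const end2030 = 13.95;

// Assumption: the compounding window is not stated, so both readings are shown.
const sevenYear = cagr(start2023, end2030, 7); // 2023 -> 2030, seven periods
const sixYear = cagr(start2023, end2030, 6); // six periods, e.g. a 2024-2030 forecast

console.log(`7 periods: ${(sevenYear * 100).toFixed(1)}%`);
console.log(`6 periods: ${(sixYear * 100).toFixed(1)}%`);
```

Seven periods work out to roughly 18.1% a year and six periods to roughly 21.4%, so the quoted 21.3% is most consistent with a six-year forecast window rather than growth measured from the 2023 baseline.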

Enterprise Adoption: Companies are moving from experimental AI to production deployments, creating demand for proper operational tooling. Organizations need infrastructure that can handle prompt management, versioning, testing, and deployment at scale.

Developer Pain Points: The market research confirms what Alex experienced. Teams struggle with:

- Prompt versioning and collaboration

- Integration between authoring and deployment environments

- Observability and performance tracking

- Managing different models and providers

What Makes Agentsmith Different

Most LLM tools focus on the sexy stuff like training models or fancy UIs. Agentsmith tackles the unsexy but critical infrastructure that every AI team needs.

Core Features:

- Prompt CMS for centralized authoring and management

- GitHub synchronization via pull requests for version control

- Type-safe SDK to prevent errors in production

- Provider switching to easily test different AI models

- Testing environment to refine prompts before deployment

The platform addresses the four pain points Alex identified from his own experience:

Reliability Problem: Prompts get treated as afterthoughts in code, leading to silent failures when LLM calls break. Agentsmith makes prompts first-class citizens with proper templating and configuration.
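To make the "silent failure" concrete, here is a small sketch contrasting naive template rendering with a strict version that refuses to produce a prompt when a variable is missing. The `{{variable}}` placeholder syntax and both helper functions are hypothetical illustrations, not Agentsmith's actual templating engine or API.

```typescript
// Hypothetical helpers for illustration only; not part of Agentsmith.
const PLACEHOLDER = /\{\{\s*(\w+)\s*\}\}/g;

// Naive rendering: a missing variable silently becomes the text "undefined".
function renderNaive(template: string, vars: Record<string, string>): string {
  return template.replace(PLACEHOLDER, (_match: string, key: string) => String(vars[key]));
}

// Strict rendering: a missing variable throws before any LLM call is made.
function renderStrict(template: string, vars: Record<string, string>): string {
  return template.replace(PLACEHOLDER, (_match: string, key: string) => {
    const value = vars[key];
    if (value === undefined) {
      throw new Error(`Missing prompt variable: ${key}`);
    }
    return value;
  });
}

const template = "You are a tutor for {{subject}}. Greet {{studentName}}.";

// The naive version happily ships a broken prompt to the model.
console.log(renderNaive(template, { subject: "algebra" }));

// The strict version fails loudly instead.
try {
  renderStrict(template, { subject: "algebra" });
} catch (err) {
  console.log((err as Error).message);
}
```

The naive call prints a prompt containing the literal word "undefined", which is exactly the kind of error that never shows up in logs but quietly degrades model output.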

Collaboration Mess: Without a single source of truth, teams waste time syncing on prompt changes. Agentsmith provides centralized management with proper review workflows.

Authoring Chaos: Writing, testing, and deploying prompts across different tools creates handoff errors. Agentsmith unifies the entire workflow in one platform.

Observability Gap: Teams can't improve what they can't measure. Agentsmith correlates prompts to outcomes so teams know what's working and what needs iteration.

Early Traction and Developer Interest

Q: What's your current traction?

Alex: Just launched, have a WHOPPING 8 GitHub stars.

While eight GitHub stars might sound modest, that's not unusual for a developer tool that has only just launched.

The real metric for open-source tools is developer adoption and contribution activity, which tends to build gradually as word spreads through the community.

Alex is building in public across multiple channels, positioning himself as a thought leader in the LLMOps space:

Q: Where can people learn more?

Alex:

- X/Twitter: https://x.com/chad_syntax

- GitHub: https://github.com/chad-syntax/agentsmith

- Peerlist: https://peerlist.io/chad_syntax

- Dev.to: https://dev.to/chad_syntax

- LinkedIn: https://www.linkedin.com/in/alex-lanzoni/

- Bluesky: https://bsky.app/profile/chadsyntax.com

Why This Matters in 2025

Three trends are creating perfect conditions for Agentsmith's growth:

"Year of Agents": 2025 is being called the year of AI agents, as LLMs evolve from single-turn responses to multi-step reasoning and tool integration. This complexity makes proper prompt management even more critical.

Enterprise AI Scaling: Companies are moving beyond proof-of-concepts to production deployments. Microsoft reports that some AI developers have slashed time-to-production by 80% using proper tooling and frameworks.

Open Source Momentum: Developer tools often win through open-source adoption first, then monetize through enterprise features. Agentsmith's open-source approach positions it well for grassroots developer adoption.

The Technical Edge

What sets Agentsmith apart isn't just that it solves workflow problems, but how it solves them:

GitHub Integration: By syncing prompts through pull requests, Agentsmith fits naturally into existing developer workflows. Teams can review prompt changes like code changes.

Type Safety: The TypeScript SDK (with Python coming soon) prevents the silent errors that plague LLM integrations. If a prompt variable changes, the code fails at build time rather than at runtime.
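Here is a rough sketch of how build-time checking of prompt variables can work in TypeScript. Everything in it (`TypedPrompt`, `definePrompt`, `GreetStudentVars`, the `greet-student` slug) is a hypothetical illustration of the general technique, not Agentsmith's actual SDK surface.

```typescript
// Hypothetical sketch, not Agentsmith's SDK: a prompt carries the type of the
// variables its template needs, so the compiler checks every call site.
interface TypedPrompt<V> {
  readonly slug: string;
  compile(vars: V): string;
}

function definePrompt<V>(slug: string, render: (vars: V) => string): TypedPrompt<V> {
  return { slug, compile: render };
}

// In a real setup these types would likely be generated from the stored
// prompt; here they are written by hand.
interface GreetStudentVars {
  studentName: string;
  subject: string;
}

const greetStudent = definePrompt<GreetStudentVars>(
  "greet-student",
  (v) => `Greet ${v.studentName}, who is studying ${v.subject}.`,
);

console.log(greetStudent.compile({ studentName: "Sam", subject: "algebra" }));

// If `subject` were renamed to `topic` in GreetStudentVars, the call above
// would stop compiling. Likewise, tsc rejects this line because `subject`
// is missing:
// greetStudent.compile({ studentName: "Sam" });
```

The design point is that the variable list lives in a type rather than in a comment or a doc, so a stale call site becomes a compiler error instead of a malformed prompt in production.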

Provider Agnostic: Teams can switch between AI providers and models without changing their application code, which is crucial as the LLM landscape evolves rapidly.
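The general pattern behind provider switching can be sketched as follows. The `CompletionProvider` interface, the two stub providers, and the `selectProvider` helper are all assumptions made up for this example; they stand in for real vendor clients and do not reflect how Agentsmith implements the feature.

```typescript
// Hypothetical interface: application code depends on this, not on a vendor SDK.
interface CompletionProvider {
  readonly id: string;
  complete(prompt: string): Promise<string>;
}

// Stub providers standing in for real vendor clients (no network calls).
class EchoProvider implements CompletionProvider {
  readonly id = "echo";
  async complete(prompt: string): Promise<string> {
    return `echo: ${prompt}`;
  }
}

class UppercaseProvider implements CompletionProvider {
  readonly id = "uppercase";
  async complete(prompt: string): Promise<string> {
    return prompt.toUpperCase();
  }
}

const providers: Record<string, CompletionProvider> = {
  echo: new EchoProvider(),
  uppercase: new UppercaseProvider(),
};

// Switching providers is a configuration change, not a code change.
function selectProvider(key: string): CompletionProvider {
  const provider = providers[key];
  if (provider === undefined) {
    throw new Error(`Unknown provider: ${key}`);
  }
  return provider;
}

// Application code only ever sees the interface.
async function greet(provider: CompletionProvider, studentName: string): Promise<string> {
  return provider.complete(`Greet ${studentName}.`);
}

greet(selectProvider("echo"), "Sam").then((reply) => console.log(reply));
```

Because `greet` accepts any `CompletionProvider`, testing a different model means changing the key passed to `selectProvider` and nothing else.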

The Bottom Line

Alex's story illustrates a crucial truth about developer tools: sometimes the biggest breakthrough is just shipping. He identified a real problem from his own experience, built a solution, and launched despite his perfectionist tendencies.

Agentsmith addresses fundamental infrastructure problems that every AI team faces. As companies scale their LLM applications from experiments to production systems, proper prompt management becomes essential, not optional.

The LLMOps market is exploding because enterprises are realizing that managing LLMs requires more than just API calls. They need proper tooling for versioning, testing, deployment, and observability. Agentsmith provides exactly that infrastructure in an open-source package that fits developer workflows.

In a market projected to grow 21% annually through 2030, timing matters. Alex launched at the perfect moment when developer pain is highest and enterprise adoption is accelerating.

Ready to fix your prompt management workflow? Check out Agentsmith.dev.